Setting Up GPU Support (CUDA & cuDNN) on Any Cloud/Native Instance for Deep Learning | by Ashutosh Hathidara | Medium

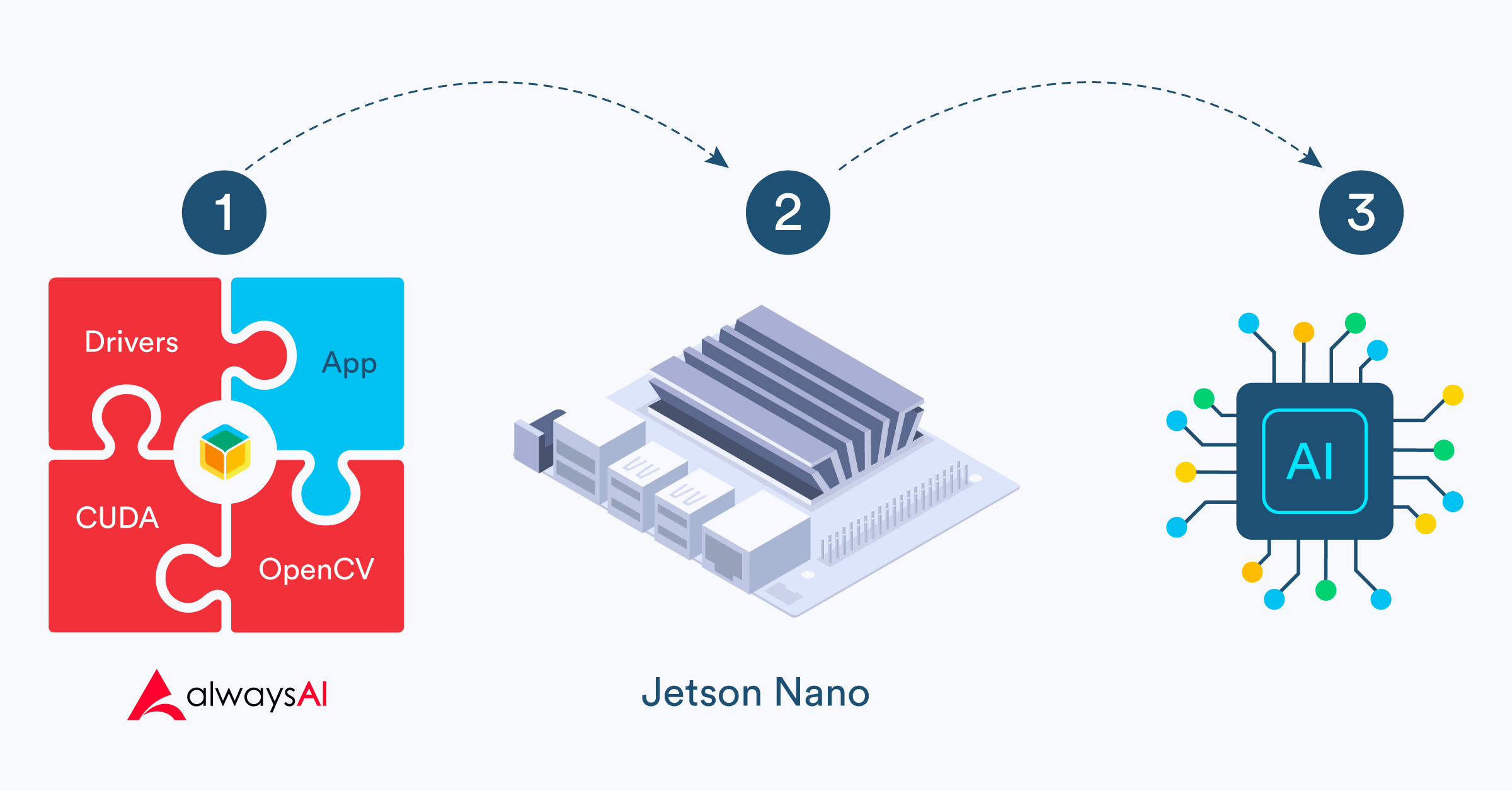

Installing CUDA Toolkit 10.0 and cuDNN for Deep learning with Tensorflow-gpu on Ubuntu 18.04+ LTS | by Aditya Singh | Medium

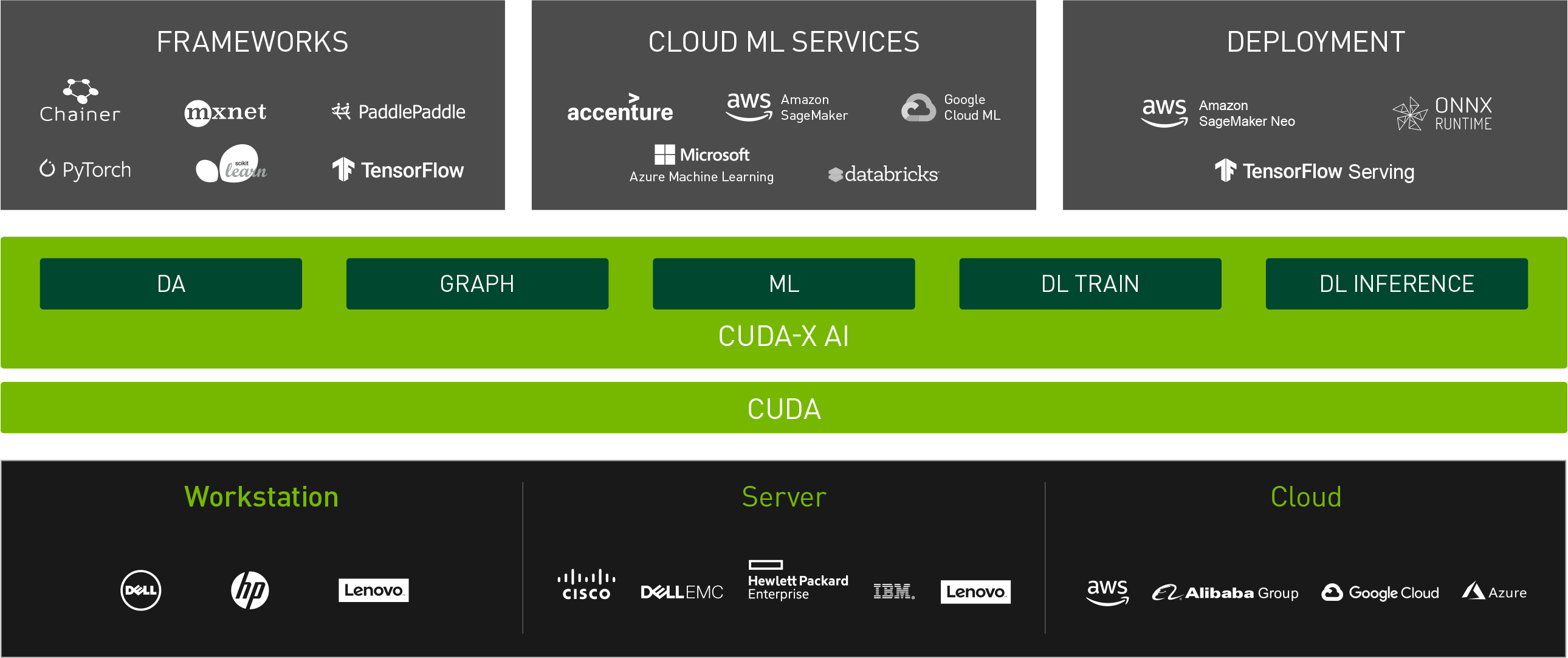

AIME Machine Learning Framework Container Management | Deep Learning Workstations, Servers, GPU-Cloud Services | AIME

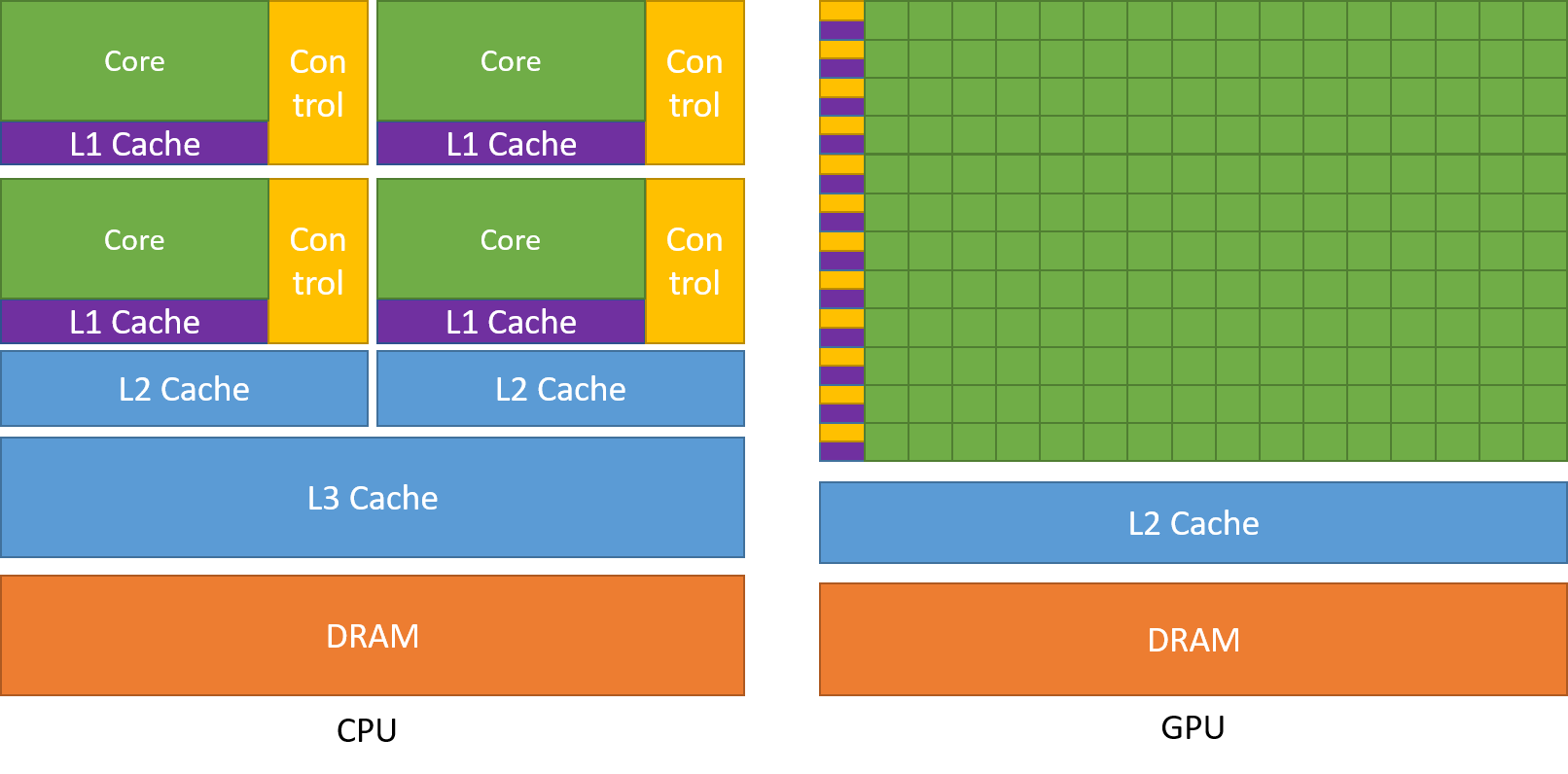

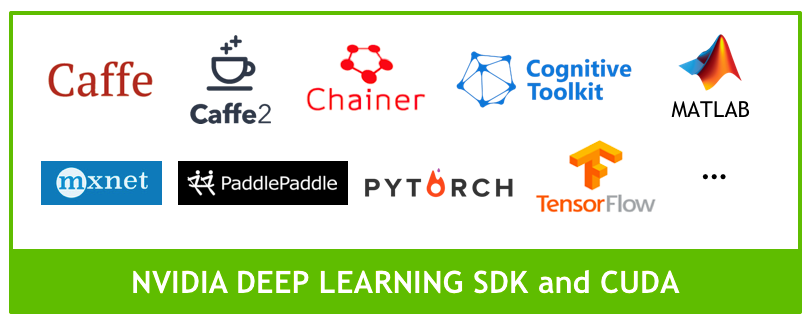

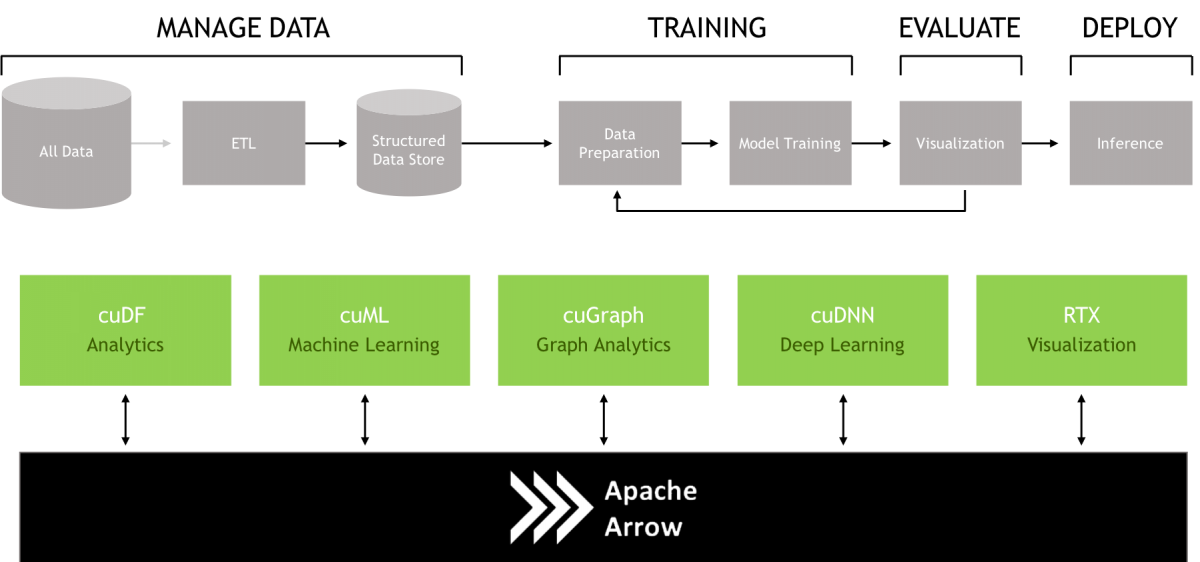

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

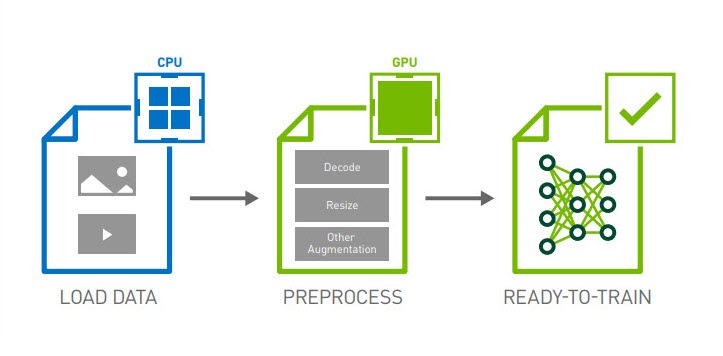

Sharing GPU for Machine Learning/Deep Learning on VMware vSphere with NVIDIA GRID: Why is it needed? And How to share GPU? - VROOM! Performance Blog

![\[N\] HGX-2 Deep Learning Benchmarks: The 81,920 CUDA Core “Behemoth” GPU Server : r/MachineLearning](https://preview.redd.it/43a9oi0asug31.png?width=1280&format=png&auto=webp&s=e261587dfffdfeb181cf26fe6515def62c6a08db)

![\[HowTo\] Installing NVIDIA CUDA and cuDNN for Machine Learning - Tutorials - Manjaro Linux Forum](https://forum.manjaro.org/uploads/default/original/3X/3/7/37c290e86397bd15fd33c4ff944945f3174d473c.png)